The What

A shader is a super fast program that runs on the GPU as part of the graphics pipeline and decides what color to render each pixel on your screen. There are several kinds of shaders, each with its own quirks. While coloring pixels sounds as interesting as watching paint dry, we can do some amazing things with this ability.

Where does the name Shader come from?

This use of the term "shader" was coined by Pixar in version 3.0 of their RenderMan Interface Specification in 1988.

The Why

The first question is: why write shaders at all? Why not just write code in C# like we usually do? We could write a C# script that loops over the pixels of the screen and updates the color of each one. So why shouldn't we?

The reason is that our screens have very high resolutions. A Retina display, for example, has a resolution of 2880x1800. That means that if we run at 60 frames per second, we have 311,040,000 pixels to update each second. That's a lot. Our CPUs have around four cores, which run in parallel and can each run a couple of threads at the same time. That is nowhere near enough to run a shading calculation for every one of those pixels each second.
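The Python sketch below puts those numbers side by side. The resolution, frame rate and four cores come from the text. The figure of 100 math operations per pixel is a made-up assumption, only there to show how quickly the work adds up.

```python
def pixels_per_second(width: int, height: int, fps: int) -> int:
    """How many pixel colors must be produced each second to redraw the whole screen."""
    return width * height * fps

# Retina resolution and frame rate from the text above.
per_frame = 2880 * 1800
per_second = pixels_per_second(2880, 1800, 60)

# Assumptions for illustration only: 4 CPU cores and a shader
# that needs about 100 math operations per pixel.
cores = 4
ops_per_pixel = 100
ops_per_core = per_second * ops_per_pixel // cores

print(f"{per_frame:,} pixels per frame")                    # 5,184,000 pixels per frame
print(f"{per_second:,} pixels per second")                  # 311,040,000 pixels per second
print(f"{ops_per_core:,} operations per core per second")   # 7,776,000,000 operations per core per second
```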

In contrast, our GPUs have lots and lots of tiny processors. Each is less powerful than a CPU core, but they can all run at the same time. On top of that, the GPU has dedicated hardware for the vector and matrix math that graphics relies on, so these operations run much faster than they would on the CPU.

This is where shaders shine: shader code runs on the GPU, unlike C# code, which runs on the CPU. The GPU is optimized for doing many operations at the same time. And since we need a lot of math to do impressive things with our shaders, we also benefit from its hardware-accelerated math.

The Languages

ShaderLab - is a declarative language that you use in shader source files. It uses a nested-braces syntax to describe a Shader object.

HLSL - In Unity, shaders are written in High-Level Shading Language, or HLSL for short. HLSL was developed by Microsoft for their Direct3D 9 API. Even though this is the language used in Unity, it's good to know that there are other shading languages, such as GLSL, which was created for OpenGL.

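Unity can also use shader programs written directly in GLSL. However, it recommends writing shaders in HLSL and using GLSL only for testing, or when you know you will target only OpenGL-based platforms.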

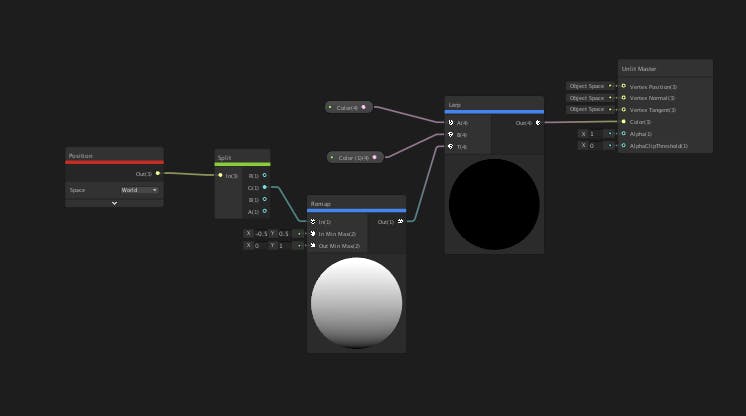

The Visual Language

In Unity 2019+, if you use URP or HDRP, you have the choice of using Shader Graph. Shader Graph is a visual scripting tool that lets you build shaders visually without complex syntax. It is missing some features and can't completely replace writing shaders in HLSL. Still, it makes our lives much easier, akin to writing in C# instead of Assembly. Under the hood, Shader Graph converts the visual graph into HLSL; the graph is just much easier to understand. For the best performance it's probably better to write HLSL directly, since Shader Graph's auto-generated code is not necessarily the most optimized HLSL.

Shader Graph is not the only visual shader editor. There are others, like Shader Forge by Freya Holmér and Amplify Shader Editor.

The Actors

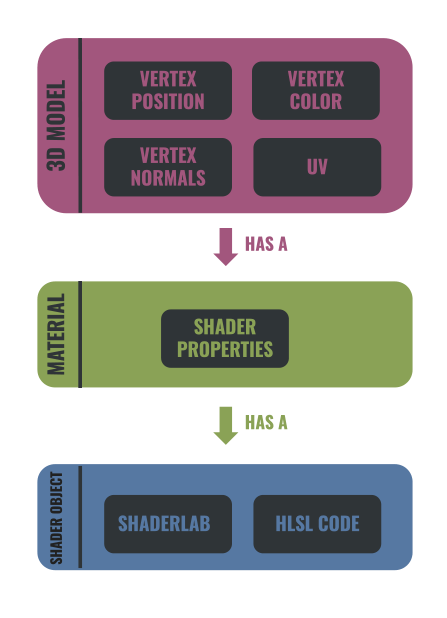

It's important to understand the relationship between the different actors involved in rendering things in Unity.

3D Model - First off, we have a 3D model. Even a sprite has a 3D model. As far as rendering goes, there is no such thing as 2D in Unity: everything has three axes, even sprites and UI. A 3D model is a visual representation of an object, for example a cube. It is made of a mesh of triangles whose corners are vertices. Vertices are the points where two or more edges meet. So, to sum it up, a 3D model is a collection of points that describe a three-dimensional object.

The 3D model holds this data:

Vertex positions - The position of every vertex that makes up the model. Positions are stored in the model's own local space (object space), not in world space.

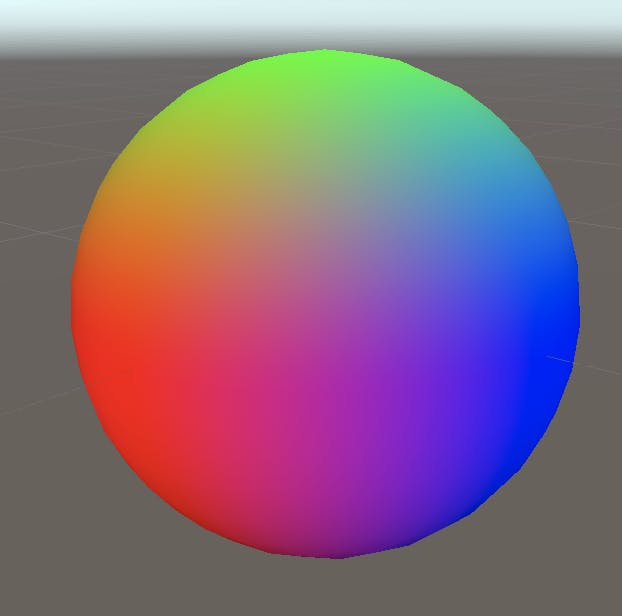

Vertex Normals - Each vertex has a normal, a vector that describes which direction the surface faces at that vertex.

In this example, we visualize the normal direction with color by using the XYZ values as RGB. The normal (0,1,0) shows as green, the normal (1,0,0) as red, and so on.
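Here is a minimal Python sketch of that XYZ-as-RGB visualization, assuming unit-length normals. A color channel can't be negative, so a direct mapping shows negative directions as black. The remapped variant (n * 0.5 + 0.5) is a common alternative. It is included for comparison, not as the method the image above uses.

```python
def normal_to_rgb(normal):
    """Map a unit normal (x, y, z) straight to (r, g, b).

    Color channels can't go below 0, so negative components are clamped
    to 0 and show up as black in that channel.
    """
    return tuple(max(0.0, min(1.0, c)) for c in normal)

def normal_to_rgb_remapped(normal):
    """Common alternative: remap -1..1 to 0..1 so negative directions stay visible."""
    return tuple(c * 0.5 + 0.5 for c in normal)

print(normal_to_rgb((0.0, 1.0, 0.0)))            # (0.0, 1.0, 0.0) -> green
print(normal_to_rgb((1.0, 0.0, 0.0)))            # (1.0, 0.0, 0.0) -> red
print(normal_to_rgb((0.0, 0.0, -1.0)))           # (0.0, 0.0, 0.0) -> black
print(normal_to_rgb_remapped((0.0, 0.0, -1.0)))  # (0.5, 0.5, 0.0)
```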

UV Data - To project a 2D texture onto the 3D object, each vertex stores a mapping to a location on the texture. The UV values usually range from (0,0) to (1,1).
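The Python sketch below shows roughly what a UV lookup does by picking the nearest texel for a UV pair. It makes two assumptions: (0,0) sits at the bottom-left of the texture, as in Unity, and out-of-range values are clamped. Real GPUs usually filter between neighboring texels and can repeat the texture instead of clamping. The texture contents are hypothetical.

```python
def sample_nearest(texture, u, v):
    """Nearest-neighbor lookup of a texel from UV coordinates.

    texture: list of rows; row 0 is the bottom row (v = 0).
    u, v: coordinates, normally 0..1 (values outside are clamped here).
    """
    height = len(texture)
    width = len(texture[0])
    u = min(max(u, 0.0), 1.0)
    v = min(max(v, 0.0), 1.0)
    # Scale 0..1 to texel indices; clamp so that 1.0 still lands on the last texel.
    x = min(int(u * width), width - 1)
    y = min(int(v * height), height - 1)
    return texture[y][x]

# Hypothetical 2x2 texture: bottom row black/white, top row red/blue.
tex = [
    ["black", "white"],
    ["red", "blue"],
]
print(sample_nearest(tex, 0.0, 0.0))    # black (bottom-left corner)
print(sample_nearest(tex, 1.0, 1.0))    # blue (top-right corner)
print(sample_nearest(tex, 0.25, 0.75))  # red
```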

Why Is it called UV Mapping?

The letters "U" and "V" denote the axes of the 2D texture because "X", "Y", and "Z" are already used to denote the axes of the 3D object in model space, while "W" (in addition to XYZ) is used in calculating quaternion rotations, a common operation in computer graphics.

Vertex Color - Vertex color is used when we don't want to map a texture to the model but rather give each vertex a color. It is also used as a hack in shaders to store extra data on each vertex.

Material - A material's relationship to a shader is akin to the relationship between an object and its class in OO programming. The material holds the values of the shader's parameters, and a single shader can be used by many materials. Every 3D model Unity renders has at least one material. Material properties can vary from textures to vectors, depending on what is defined in the shader. Materials are assets, much like ScriptableObjects. If you change a material asset at runtime (for example through Renderer.sharedMaterial), the changes persist after Unity returns to the editor from Play mode.
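The class/object analogy can be sketched in Python. The Shader and Material classes below are hypothetical stand-ins, not Unity's API. The shader declares which properties exist and their defaults, and each material keeps its own values for them.

```python
class Shader:
    """Hypothetical stand-in for a shader: declares properties and their defaults."""
    def __init__(self, name, property_defaults):
        self.name = name
        self.property_defaults = dict(property_defaults)

class Material:
    """Hypothetical stand-in for a material: one shader plus its own property values."""
    def __init__(self, shader):
        self.shader = shader
        # Each material starts from the shader's defaults but owns its own copy.
        self.properties = dict(shader.property_defaults)

    def set(self, name, value):
        # Only properties declared by the shader can be set.
        if name not in self.shader.property_defaults:
            raise KeyError(f"{self.shader.name} has no property {name!r}")
        self.properties[name] = value

unlit = Shader("Unlit/SimpleUnlitTexturedShader", {"_MainTex": "white"})
brick = Material(unlit)   # two materials sharing one shader
grass = Material(unlit)
brick.set("_MainTex", "brick.png")
grass.set("_MainTex", "grass.png")
print(brick.properties, grass.properties)
```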

Shader Object - A Shader object is a Unity-specific way of working with shader programs; it is a wrapper for shader programs and other information. It lets you define multiple shader programs in the same file, and tell Unity how to use them.

Here is an example of a simple unlit shader, written in ShaderLab with an HLSL program inside:

```hlsl
Shader "Unlit/SimpleUnlitTexturedShader"
{
    Properties
    {
        // we have removed support for texture tiling/offset,
        // so make them not be displayed in material inspector
        [NoScaleOffset] _MainTex ("Texture", 2D) = "white" {}
    }
    SubShader
    {
        Tags { "RenderType"="Opaque" }
        Pass
        {
            CGPROGRAM
            // use "vert" function as the vertex shader
            #pragma vertex vert
            // use "frag" function as the pixel (fragment) shader
            #pragma fragment frag
            // Unity helper functions such as UnityObjectToClipPos
            #include "UnityCG.cginc"

            // vertex shader inputs
            struct appdata
            {
                float4 vertex : POSITION; // vertex position
                float2 uv : TEXCOORD0;    // texture coordinate
            };

            // vertex shader outputs ("vertex to fragment")
            struct v2f
            {
                float2 uv : TEXCOORD0;       // texture coordinate
                float4 vertex : SV_POSITION; // clip space position
            };

            // vertex shader
            v2f vert (appdata v)
            {
                v2f o;
                // transform position to clip space
                // (same as multiplying by the model*view*projection matrix)
                o.vertex = UnityObjectToClipPos(v.vertex);
                // just pass the texture coordinate
                o.uv = v.uv;
                return o;
            }

            // texture we will sample
            sampler2D _MainTex;

            // pixel shader; returns low precision ("fixed4" type)
            // color ("SV_Target" semantic)
            fixed4 frag (v2f i) : SV_Target
            {
                // sample texture and return it
                fixed4 col = tex2D(_MainTex, i.uv);
                return col;
            }
            ENDCG
        }
    }
}
```

Fun Fact - Unity recompiles a shader as soon as you save it, even while the game is running in Play mode, so you can change it and see the changes live.

The Anatomy Of a Shader Object

Properties - The properties of a shader are exposed in the shader's material. They range from numbers (including on/off toggles), colors, and vectors to textures, and they are all used in the shader's calculations.

While Transparent geometry, anything between 2501 - 5000 is rendered back to front, this is to get proper blending. I suggest reading up on the painter’s algorithm.

Sub Shaders - A shader can contain one or more sub-shaders. Each sub shader lets you define different GPU settings and shader programs for different hardware, render pipelines, and runtime settings.

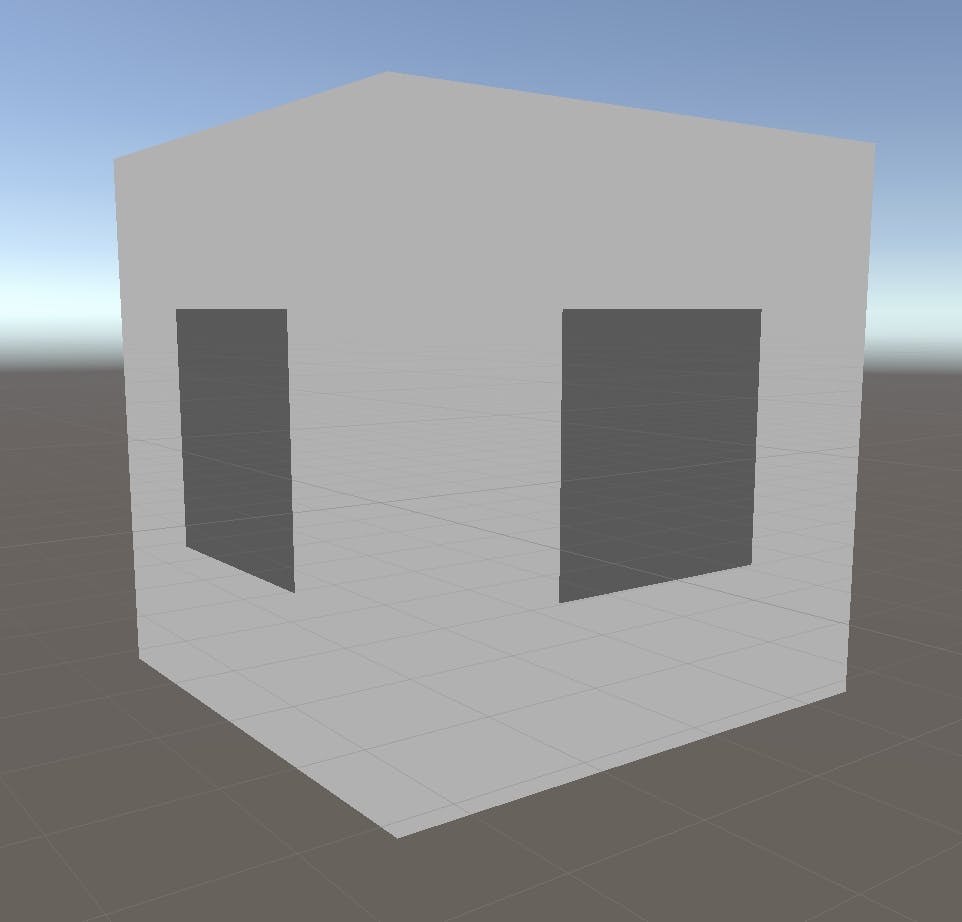

Culling - When we have a 3d model of a cube we know that the sides of the cube which are facing away from our view cant be seen so why bother to draw them? This is where culling (also known as back face culling) comes in, it's the act of reducing the number of vertices that need to be rendered. This is used as an optimization.

There are several culling tags:

- Back - Don’t render polygons facing away from the viewer (default).:

- Front - Don’t render polygons facing toward the viewer. Used for turning objects inside-out.

- Off - Disables culling - all faces are drawn. Used for special effects.

Rendering Order - Unity provides a render Queue to determine in which order objects are rendered. You can choose in the shader its render Queue. It can also be changed in the material.

Background (1000) - This render queue is rendered before any others. Used for skybox and backgrounds.

Geometry (2000) - Opaque geometry uses this queue.

AlphaTest (2450) - Alpha tested geometry uses this queue. shaders that don’t write to depth buffer should go here (glass, particle effects). On PowerVR GPUs found in iOS and some Android devices, alpha testing is resource-intensive. Do not try to use it for performance optimization on these platforms, as it causes the game to run slower than usual.

GeometryLast (2500) - Last render queue that is considered "opaque".

Transparent (3000) - This render queue is rendered after Geometry and AlphaTest, in back-to-front order.

Overlay (4000) - This render queue is meant for overlay effects .e.g. lens flares.

Opaque geometry, anything rendered between 0 - 2500 is rendered front To back, this way the ZTest is rejecting pixels where the depth has already been written too. This is to reduce the fillrate.

And finally, Unity's sorting doesn't always sort how you expect due to batching, bounds calculations, and some other Unity oddness.
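The queue-and-distance rules above can be modeled in a few lines of Python. This is a simplified sketch, not Unity's actual sorting code. It sorts by queue first; within a queue, opaque objects (2500 and below) go front to back and the rest go back to front. The Renderer class and the scene are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Renderer:
    name: str
    queue: int        # render queue value, e.g. 2000 for Geometry
    distance: float   # distance from the camera

def draw_order(renderers):
    """Return names in draw order under the simplified rules described above."""
    def key(r):
        if r.queue <= 2500:
            return (r.queue, r.distance)   # opaque: nearest first
        return (r.queue, -r.distance)      # transparent: farthest first
    return [r.name for r in sorted(renderers, key=key)]

scene = [
    Renderer("window", 3000, 5.0),
    Renderer("smoke", 3000, 12.0),
    Renderer("wall", 2000, 10.0),
    Renderer("crate", 2000, 3.0),
    Renderer("background", 1000, 1000.0),
]
print(draw_order(scene))
# ['background', 'crate', 'wall', 'smoke', 'window']
```

Batching and the other factors mentioned above are deliberately left out, which is exactly why the real order can surprise you.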

Shader Pass - A shader can have one or more passes. Each pass is a request to draw the object once, using the data that is passed to the shader. Passes run in order and draw into the same render target, so a later pass draws on top of, or blends with, what the earlier passes wrote.

In cases like an outline shader, we want more than one pass. One pass draws the object. Another draws the outline, which is the object made slightly larger and drawn in a single color. The outline pass renders only its back faces (Cull Front), so the original object stays visible in front of it.
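The enlarged copy of the object is usually produced in the outline pass's vertex shader by pushing each vertex out along its normal. Here is that step as a Python sketch with hypothetical data. In a real shader it would be a single line in the vertex function.

```python
def extrude_along_normals(positions, normals, width):
    """Push every vertex outward along its (unit) normal by `width`.

    This is what the vertex shader of a hypothetical outline pass could do
    before the enlarged mesh is drawn in a single color.
    """
    return [
        tuple(p + n * width for p, n in zip(pos, nrm))
        for pos, nrm in zip(positions, normals)
    ]

# Top face of a unit cube: all four corners share the normal (0, 1, 0).
top_positions = [(-0.5, 0.5, -0.5), (0.5, 0.5, -0.5), (0.5, 0.5, 0.5), (-0.5, 0.5, 0.5)]
top_normals = [(0.0, 1.0, 0.0)] * 4
print(extrude_along_normals(top_positions, top_normals, 0.25))
# [(-0.5, 0.75, -0.5), (0.5, 0.75, -0.5), (0.5, 0.75, 0.5), (-0.5, 0.75, 0.5)]
```

On a hard-edged cube each corner has several normals (one per face), so this simple push can leave gaps at the corners. Outline shaders often use smoothed normals to avoid that.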

Shader Graph doesn't support multi-pass shaders.

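Keywords - A keyword is a named switch that makes Unity compile separate variants of a shader, one for each combination of enabled keywords.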

Keywords are useful for many reasons, such as:

- Creating shaders with features that you can turn on or off for each Material instance.

- Creating shaders with features that behave differently on certain platforms.

- Creating shaders that scale in complexity based on various conditions.
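To see why keyword combinations matter, the Python sketch below counts the variants produced by several keyword sets. Each set stands for one line declared with #pragma multi_compile or #pragma shader_feature, and "_" stands for "no keyword". The keyword names are hypothetical. The point is that variant counts multiply with every set you add.

```python
from itertools import product

def enumerate_variants(keyword_sets):
    """List every keyword combination; each one is a separately compiled variant.

    keyword_sets: one list of choices per keyword set; "_" means no keyword.
    """
    variants = []
    for combo in product(*keyword_sets):
        variants.append(tuple(k for k in combo if k != "_"))
    return variants

# Hypothetical keyword sets for illustration.
sets = [["_", "_TINT_ON"], ["_", "_RIM_ON"], ["_LOW_QUALITY", "_HIGH_QUALITY"]]
variants = enumerate_variants(sets)
print(len(variants))  # 8
```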

Types Of Shader Programs

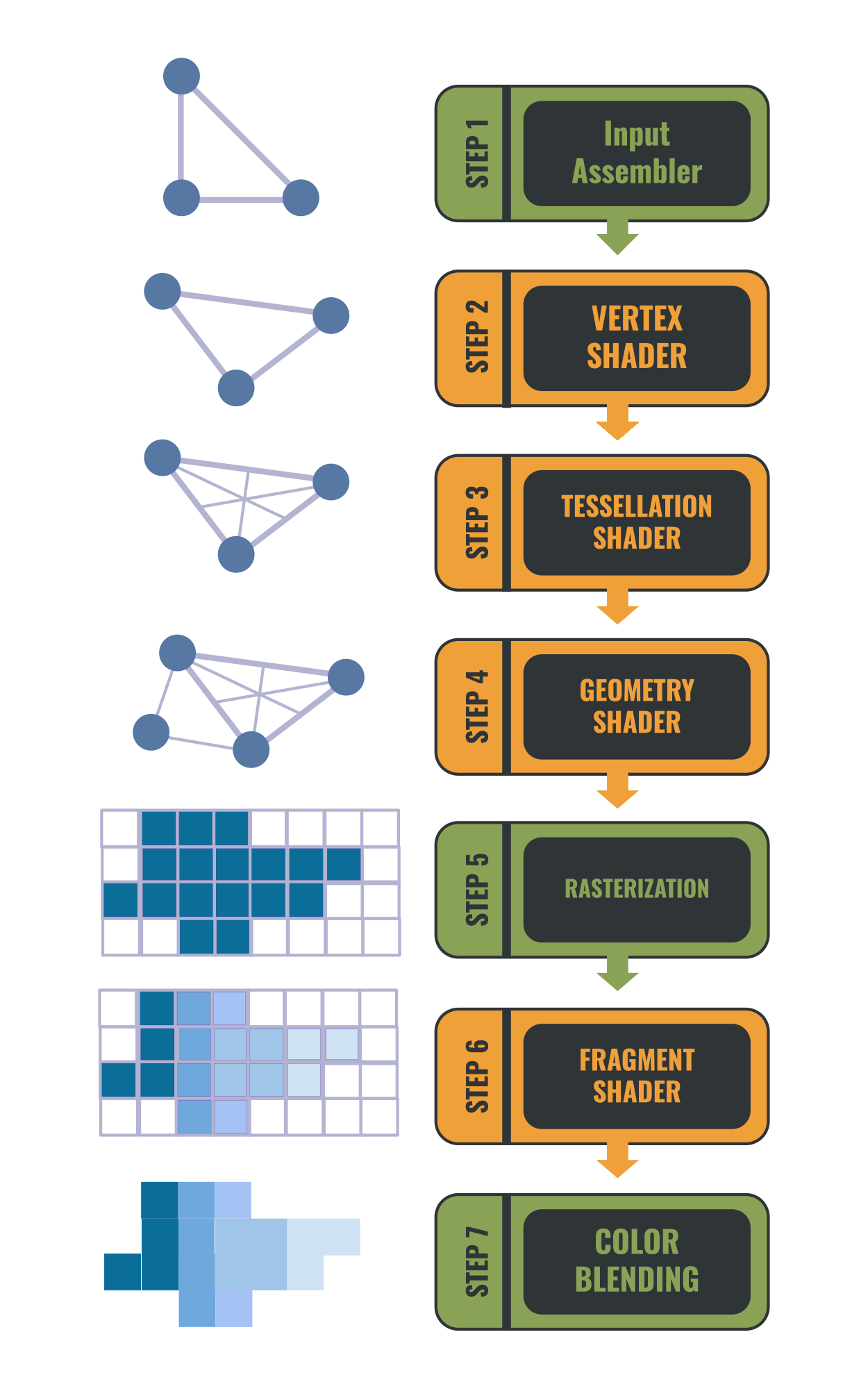

Vertex shader - All the data about the mesh that needs to be rendered is passed to the vertex shader, also known as the vertex function, which runs once per vertex. The vertex shader is responsible for transforming each vertex position into clip space, which the GPU then maps to the screen. It can also manipulate the mesh data to create special effects: it can change vertex positions, colors, normals, and texture coordinates.
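The model*view*projection transform performed by the example shader's vertex function can be reproduced on the CPU in Python. This sketch assumes an OpenGL-style projection matrix: the camera looks down -Z and clip-space depth runs from -1 to 1. Unity adjusts the depth range per graphics API, so treat the numbers as illustrative. The camera and object placement are hypothetical.

```python
import math

def mat_mul(a, b):
    """Multiply two 4x4 matrices (row-major lists of lists)."""
    return [[sum(a[r][k] * b[k][c] for k in range(4)) for c in range(4)] for r in range(4)]

def mat_vec(m, v):
    """Multiply a 4x4 matrix by a column vector (x, y, z, w), like HLSL mul(m, v)."""
    return [sum(m[r][k] * v[k] for k in range(4)) for r in range(4)]

def translation(tx, ty, tz):
    return [[1, 0, 0, tx], [0, 1, 0, ty], [0, 0, 1, tz], [0, 0, 0, 1]]

def perspective(fov_y_deg, aspect, near, far):
    """OpenGL-style perspective projection (camera looks down -Z)."""
    f = 1.0 / math.tan(math.radians(fov_y_deg) / 2.0)
    return [
        [f / aspect, 0, 0, 0],
        [0, f, 0, 0],
        [0, 0, (far + near) / (near - far), 2 * far * near / (near - far)],
        [0, 0, -1, 0],
    ]

model = translation(0, 0, -5)            # object placed 5 units in front of the camera
view = translation(0, 0, 0)              # camera at the origin (identity view matrix)
proj = perspective(60, 16 / 9, 0.3, 1000)
mvp = mat_mul(proj, mat_mul(view, model))

vertex = [1.0, 1.0, 0.0, 1.0]            # object-space position with w = 1
clip = mat_vec(mvp, vertex)              # what the vertex shader outputs
ndc = [c / clip[3] for c in clip[:3]]    # the perspective divide the GPU does afterwards
print(clip, ndc)
```

Multiplying the three matrices once into mvp and reusing it for every vertex is cheaper than applying them one by one, which is why the combined matrix is the usual choice.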

Tessellation shaders - These improve the quality of geometry by subdividing triangles into smaller ones according to rules. They are used to improve the look of things you get up close to, like floors and walls.
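The Python sketch below is a simplified stand-in for tessellation. It splits each triangle into four by connecting its edge midpoints. Real tessellation stages are driven by per-edge tessellation factors set in the shader, so this uniform subdivision only shows the basic idea.

```python
def midpoint(a, b):
    return tuple((x + y) / 2 for x, y in zip(a, b))

def subdivide(tri):
    """Split one triangle into four by connecting its edge midpoints (winding kept)."""
    a, b, c = tri
    ab, bc, ca = midpoint(a, b), midpoint(b, c), midpoint(c, a)
    return [(a, ab, ca), (ab, b, bc), (ca, bc, c), (ab, bc, ca)]

def tessellate(triangles, levels):
    """Apply the four-way split `levels` times."""
    for _ in range(levels):
        triangles = [t for tri in triangles for t in subdivide(tri)]
    return triangles

tri = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
print(len(tessellate([tri], 2)))  # 16
```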

Geometry shader - The geometry shader is an optional step that runs after the vertex (and tessellation) stages and before the fragment shader. It receives whole primitives, for example the three vertices of a triangle. It can then output new vertices and primitives or discard existing ones. This is used in cases like generating grass or fur. Note that geometry shaders have notoriously bad performance on many GPUs and are rarely used anymore.
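Here is a CPU-side Python sketch of the grass idea. Each input triangle is kept, and one extra upright triangle (a blade) is emitted at its center. This mirrors how a geometry shader can output more primitives than it receives. It assumes the ground lies in the XZ plane with +Y up, and the blade size is hypothetical.

```python
def add_grass_blades(triangles, blade_height, half_width=0.05):
    """Keep every input triangle and add one upright blade triangle at each center.

    Assumes the ground lies in the XZ plane with +Y pointing up.
    """
    blades = []
    for a, b, c in triangles:
        # Center (centroid) of the input triangle.
        cx, cy, cz = ((p + q + r) / 3 for p, q, r in zip(a, b, c))
        blades.append((
            (cx - half_width, cy, cz),    # blade base, left
            (cx + half_width, cy, cz),    # blade base, right
            (cx, cy + blade_height, cz),  # blade tip
        ))
    return list(triangles) + blades

ground = [
    ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 0.0, 1.0)),
    ((1.0, 0.0, 0.0), (1.0, 0.0, 1.0), (0.0, 0.0, 1.0)),
]
out = add_grass_blades(ground, blade_height=0.5)
print(len(out))  # 4
```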

Fragment shader - The fragment shader, also known as the pixel shader, is the last stage of the shader programs. It runs once for each fragment (each pixel covered by a rasterized polygon) and decides what color to output.
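To make "runs once for each fragment" concrete, the Python sketch below calls a toy fragment function once for every pixel center of a tiny image. The GPU runs these calls in parallel; the loop here only shows which inputs each call receives. The shader itself is hypothetical: red comes from the horizontal coordinate and green from the vertical one.

```python
def fragment(u, v):
    """A toy fragment shader: color from the pixel's position (red = u, green = v)."""
    return (u, v, 0.0, 1.0)

def run_fragment_shader(width, height, shader):
    """Call the shader once per pixel center, serially, and collect the colors."""
    image = []
    for y in range(height):
        row = []
        for x in range(width):
            u = (x + 0.5) / width    # pixel center, normalized to 0..1
            v = (y + 0.5) / height
            row.append(shader(u, v))
        image.append(row)
    return image

img = run_fragment_shader(4, 2, fragment)
print(img[0][0])  # (0.125, 0.25, 0.0, 1.0)
```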

[Compute shader](docs.unity3d.com/Manual/class-ComputeShader..) - Compute shaders are unique in that they run outside the rendering pipeline, so they can be used for general-purpose calculations done in parallel with the GPU's power.
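Compute shaders are launched in thread groups. The Python sketch below works out how many groups are needed to cover an image, assuming a kernel declared with [numthreads(8, 8, 1)]. In Unity, these counts are what you would pass to ComputeShader.Dispatch. When the image size isn't a multiple of 8, the last groups run some extra threads, which the kernel should skip.

```python
def thread_groups(size, threads_per_group):
    """Ceiling division: how many groups are needed to cover `size` items."""
    return -(-size // threads_per_group)

def dispatch_size(width, height, numthreads=(8, 8)):
    """Group counts for a 2D image, assuming an 8x8x1 thread group per kernel launch."""
    gx = thread_groups(width, numthreads[0])
    gy = thread_groups(height, numthreads[1])
    return gx, gy, 1

print(dispatch_size(2880, 1800))  # (360, 225, 1)
print(dispatch_size(1001, 1))     # (126, 1, 1)
```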

Shader Performance

There are several ways to optimize your shaders. To understand what makes a shader faster, remember that a shader is a function that does calculations: the less complex the calculation, the faster it runs.

Precision

The first thing we can do to reduce the cost of the calculations is to reduce their precision. Shaders do math on floating-point numbers, and we can choose the precision. We can use float (full precision), which uses 32 bits, or half (half precision), which, as its name suggests, uses 16 bits. Half precision can be faster thanks to better GPU register allocation or "fast path" execution units for some lower-precision math operations. Lower precision also draws less power from the GPU, which can increase battery life. If you build for PC in Unity, it generally makes no difference which precision you choose, as desktop GPUs compute at 32-bit precision anyway. The place where precision does matter is on mobile GPUs.
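The Python sketch below shows what 16 bits costs in accuracy. It rounds values to IEEE 754 half precision with the standard struct module's "e" format, and to 32-bit float with "f". It shows the storage format only; how a particular mobile GPU evaluates half math can vary.

```python
import struct

def to_half(x):
    """Round a Python float to the nearest IEEE 754 half-precision (16-bit) value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def to_float32(x):
    """Round a Python float to the nearest 32-bit float value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

for value in (0.1, 1000.1, 2049.0):
    print(value, to_float32(value), to_half(value))
# half rounds 0.1 to 0.0999755859375, 1000.1 to 1000.0 and 2049.0 to 2048.0
```

Note how the error grows with magnitude: above 2048, half can only represent even whole numbers. That is why half is fine for colors and normals but risky for large positions.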

Expensive functions

Some mathematical operations are very expensive, for example pow, exp, log, cos, sin, and tan. These transcendental functions may look simple, but they are much more costly to calculate than basic arithmetic. As we said, the less complex the calculation, the faster the shader, so avoid functions like these when possible. One alternative is to bake such calculations into lookup tables stored in textures.
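Here is a lookup-table sketch in Python. sin is baked once into a 256-entry table over one period, then read back with linear interpolation, similar to sampling a 1D texture with linear filtering. The table size is an arbitrary assumption; a bigger table means smaller error.

```python
import math

def bake_lut(func, size, lo, hi):
    """Precompute func at `size` evenly spaced points over [lo, hi] (like a 1D texture)."""
    step = (hi - lo) / (size - 1)
    return [func(lo + i * step) for i in range(size)]

def sample_lut(lut, x, lo, hi):
    """Linearly interpolate between the two nearest table entries."""
    t = (x - lo) / (hi - lo) * (len(lut) - 1)
    t = min(max(t, 0.0), len(lut) - 1)   # clamp to the table
    i = min(int(t), len(lut) - 2)        # left neighbor index
    frac = t - i
    return lut[i] * (1.0 - frac) + lut[i + 1] * frac

SIN_LUT = bake_lut(math.sin, 256, 0.0, 2 * math.pi)
x = 1.0
print(math.sin(x), sample_lut(SIN_LUT, x, 0.0, 2 * math.pi))
```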

Branches are expensive

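Shader programs are stupid in a sense. In C#, an "if else" statement calculates either the if logic or the else logic. A GPU, however, runs many pixels together in lockstep. When pixels in the same group take different sides of a branch, the GPU calculates both sides and each pixel keeps only the result it needs. This means that branches in shaders can be expensive. To avoid this, we can use keywords. Keywords look like branches, but what they actually do is create shader variants, in this case one variant for the condition being true and one for it being false. This works when the condition is the same for a whole material or draw call rather than changing from pixel to pixel.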
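The cost of a branch can be modeled in Python with a simplified picture of a GPU running a group of pixels in lockstep (a "SIMD group"). The model is an assumption for illustration. Real hardware and compilers differ, and a compiler may also flatten short branches on its own.

```python
def simd_branch_cost(conditions, cost_if, cost_else):
    """Cycles one SIMD group spends on an if/else in this simplified model.

    conditions: the branch condition for each pixel (lane) in the group.
    If all lanes agree, only the taken side runs. If they disagree,
    the group runs both sides and each lane keeps only its own result.
    """
    any_if = any(conditions)
    any_else = not all(conditions)
    return (cost_if if any_if else 0) + (cost_else if any_else else 0)

uniform = [True] * 32                         # same condition for every pixel in the group
divergent = [i % 2 == 0 for i in range(32)]   # neighboring pixels disagree
print(simd_branch_cost(uniform, 10, 40))      # 10
print(simd_branch_cost(divergent, 10, 40))    # 50
```

A keyword variant removes the branch from the compiled code entirely. That is why it fits conditions that are the same for every pixel a material draws.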